Madhavan Mukund

Teaching

Advanced Mining Learning,

Aug-Nov 2019

Assignment 2: Reinforcement Learning

15 November, 2019

Due 30 November 7 December, 2019

The Task

Racetrack (Exercise 5.12, Sutto and Barton)

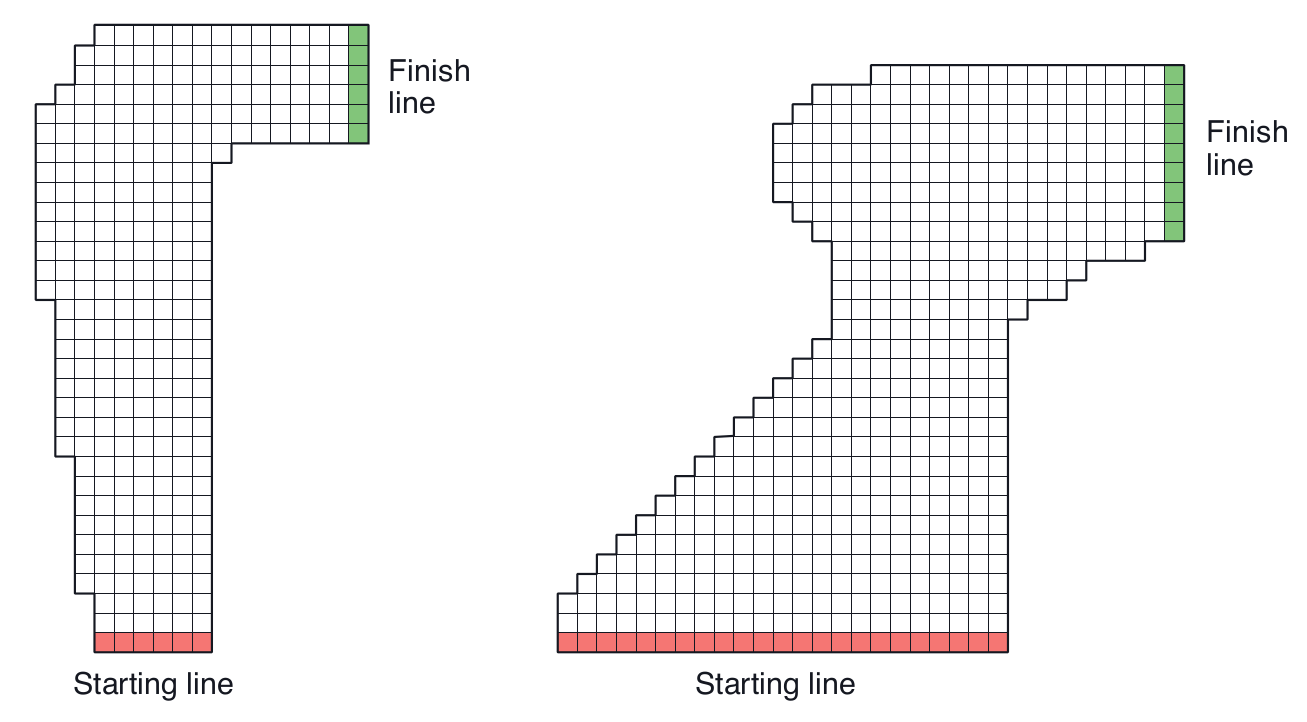

Consider driving a race car around a turn like those shown in the figures below. You want to go as fast as possible, but not so fast as to run off the track.

In our simplified racetrack, the car is at one of a discrete set of grid positions, the cells in the diagram. The velocity is also discrete, a number of grid cells moved horizontally and vertically per time step.

The actions are increments to the velocity components. Each may be changed by +1, -1, or 0 in each step, for a total of nine (3 × 3) actions. Both velocity components are restricted to be nonnegative and less than 5, and they cannot both be zero except at the starting line.

Each episode begins in one of the randomly selected start states with both velocity components zero and ends when the car crosses the finish line. The rewards are -1 for each step until the car crosses the finish line. If the car hits the track boundary, it is moved back to a random position on the starting line, both velocity components are reduced to zero, and the episode continues.

Before updating the car's location at each time step, check to see if the projected path of the car intersects the track boundary. If it intersects the finish line, the episode ends; if it intersects anywhere else, the car is considered to have hit the track boundary and is sent back to the starting line.

Apply an on-policy Monte Carlo control method to this task to compute the optimal policy from each starting state. Use ε-greedy strategies with different values of ε and learning rate α

Repeat the exercise using a TD(0) method (either SARSA or Q-learning). Once again, use ε-greedy strategies with different values of ε and learning rate α

Solving the Task

-

Here are text representations of the two examples above: Racetrack 1, Racetrack 2. In the encoding, each cell of the racetrack is encoded by a single letter: S for start, F for finish, O for an open cell within the racetrack, B for a cell outside the boundary.

You can work with this representation or any other representation. Try out both these examples and at least one more example of your own.

-

Generate plots showing the convergence rate for the two approaches for different values of ε and α, as well as a plot showing a comparison between the two approaches.

-

Use Google Colab to write your code and associated documentation.

-

Submit a link to your Colab notebook.